This year I've been demonstrating how easy it is to create modern web apps using AngularJS, Java and MongoDB. I also use Groovy during this demo to do the sorts of things Groovy is really good at - writing descriptive tests, and creating scripts.

Due to the time pressures in the demo, I never really get a chance to go into the details of the script I use, so the aim of this long-overdue blog post is to go over this Groovy script in a bit more detail.

Firstly I want to clarify that this is not my original work - I borrowed most of the ideas for the demo from my colleague Ross Lawley. In his blog post he goes into detail about how he built up an application that finds the most popular pub names in the UK. There's a section in there where he talks about downloading the OpenStreetMap data and using Python to convert the XML into something more MongoDB-friendly - it's this process that I basically stole, re-worked for coffee shops, and re-wrote for the JVM.

I'm assuming if you've worked with Java for any period of time, there has come a moment where you needed to use it to parse XML. Since my demo is supposed to be all about how easy it is to work with Java, I did not want to do this. When I wrote the demo I wasn't really all that familiar with Groovy, but what I did know was that it has built-in support for parsing and manipulating XML, which is exactly what I wanted to do. In addition, creating Maps (the data structures, not the geographical ones) with Groovy is really easy, and this is effectively what we need to insert into MongoDB.

Goal of the Script

- Parse an XML file containing OpenStreetMap data of all coffee shops.

- Extract latitude and longitude XML attributes and transform into MongoDB GeoJSON.

- Perform some basic validation on the coffee shop data from the XML.

- Insert into MongoDB.

- Make sure MongoDB knows this contains query-able geolocation data.

The script is PopulateDatabase.groovy; that link will take you to the version I presented at JavaOne.

Firstly, we need data

I used the same service Ross used in his blog post to obtain the XML file containing "all" coffee shops around the world. Now, the OpenStreetMap data is somewhat... raw and unstructured (which is why MongoDB is such a great tool for storing it), so I'm not sure I really have all the coffee shops, but I obtained enough data for an interesting demo using:

    http://www.overpass-api.de/api/xapi?*[amenity=cafe][cuisine=coffee_shop]

The resulting XML file is in the GitHub project, but if you try this yourself you might (in fact, probably will) get different results.

Each XML record looks something like:

    <node id="178821166" lat="40.4167226" lon="-3.7069112">
      <tag k="amenity" v="cafe"/>
      <tag k="cuisine" v="coffee_shop"/>
      <tag k="name" v="Chocolatería San Ginés"/>
      <tag k="wheelchair" v="limited"/>
      <tag k="wikipedia" v="es:Chocolatería San Ginés"/>
    </node>

Each coffee shop has a unique identifier and a latitude and longitude as attributes of a node element. Within this node is a series of tag elements, all with k and v attributes. Each coffee shop has a varying number of these tags, and they are not consistent from shop to shop (other than amenity and cuisine, which we used to select this data).
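To make that structure concrete, here is a small sketch that reads a trimmed copy of the sample record with XmlSlurper. It assumes Groovy 3 or later, where the class lives in groovy.xml (on older versions, drop the import). The variable names are illustrative and not necessarily the ones in the script.

```groovy
import groovy.xml.XmlSlurper

// A trimmed copy of the sample record, inlined so the snippet is self-contained
def xml = '''<node id="178821166" lat="40.4167226" lon="-3.7069112">
  <tag k="amenity" v="cafe"/>
  <tag k="cuisine" v="coffee_shop"/>
  <tag k="name" v="Chocolatería San Ginés"/>
  <tag k="wheelchair" v="limited"/>
</node>'''

def node = new XmlSlurper().parseText(xml)

// Attributes are read with the @ prefix; convert them explicitly
// to get plain Strings and doubles rather than GPath results
def id = node.@id.text()
def lat = node.@lat.toDouble()
def lon = node.@lon.toDouble()

// Child <tag> elements are reached by name; copy their k/v pairs into a Map
def tags = [:]
node.tag.each { tags[it.@k.text()] = it.@v.text() }

println "$id at ($lat, $lon): $tags"
```

Nothing here needs a schema or a hand-written parser: the element and attribute names are used directly as if they were properties.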

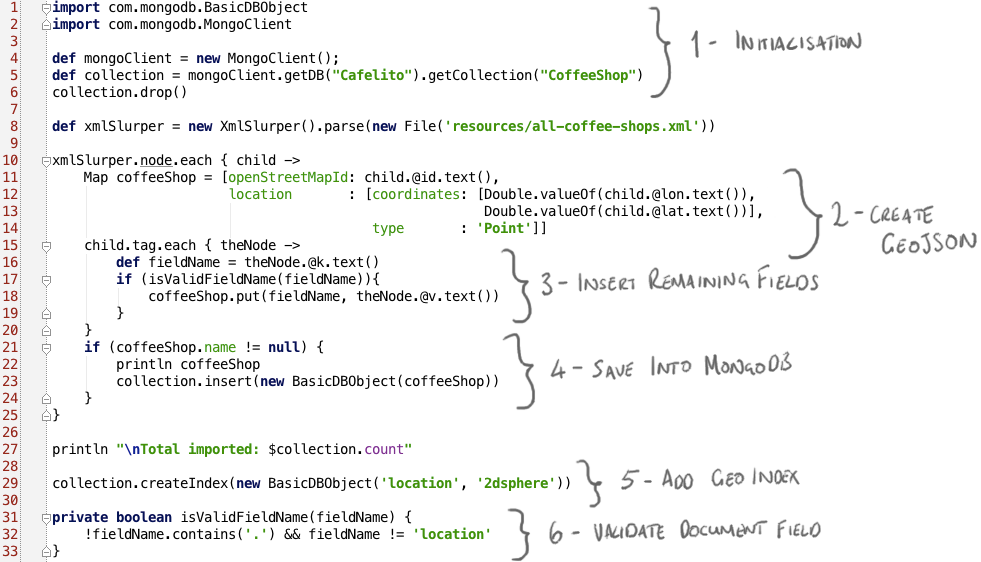

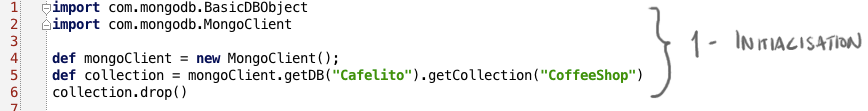

Initialisation

Before doing anything else we want to prepare the database. The assumption of this script is that either the collection we want to store the coffee shops in is empty, or full of stale data. So we're going to use the MongoDB Java Driver to get the collection that we're interested in, and then drop it.

There are two interesting things to note here:

- This Groovy script is simply using the basic Java driver. Groovy can talk quite happily to vanilla Java, it doesn't need to use a Groovy library. There are Groovy-specific libraries for talking to MongoDB (e.g. the MongoDB GORM Plugin), but the Java driver works perfectly well.

- You don't need to create databases or collections (collections are a bit like tables, but less structured) explicitly in MongoDB. You simply use the database and collection you're interested in, and if they don't already exist, the server will create them for you.

In this example, we're just using the default constructor for the MongoClient, the class that represents the connection to the database server(s). This default is localhost:27017, which is where I happen to be running the database. However, you can specify your own address and port - for more details on this see Getting Started With MongoDB and Java.

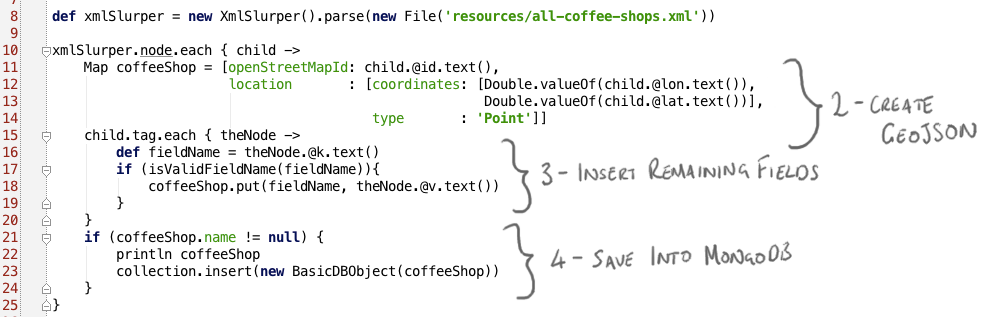
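As a rough sketch of this initialisation step, the snippet below connects with the default constructor, looks up a collection and drops it. It assumes a MongoDB server is running on localhost:27017 and that the legacy Java driver can be fetched with @Grab. The driver version and the names coffeeDemo and coffeeShops are placeholders chosen for illustration, not the ones from the script.

```groovy
@Grab('org.mongodb:mongo-java-driver:3.12.14')
import com.mongodb.MongoClient

// The no-argument constructor targets localhost:27017
def mongoClient = new MongoClient()

// Placeholder names: neither the database nor the collection has to exist
// beforehand, because the server creates them on first write
def collection = mongoClient.getDB('coffeeDemo').getCollection('coffeeShops')

// Throw away any stale data left over from a previous run
collection.drop()

def remaining = collection.count()
println "Documents after drop: $remaining"

mongoClient.close()
```

Dropping a collection that does not exist yet is harmless, so the same script works on a fresh database and on one that already holds old data.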

Turn the XML into something MongoDB-shaped

So next we're going to use Groovy's XmlSlurper to read the OpenStreetMap XML data that we talked about earlier. To iterate over every node we use: xmlSlurper.node.each. For those of you who are new to Groovy or new to Java 8, you might notice this is using a closure to define the behaviour to apply for every "node" element in the XML.

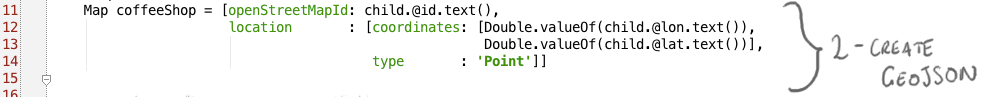
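The iteration can be sketched on a tiny stand-in document. The two records below are invented sample data, and the snippet assumes Groovy 3 or later for the groovy.xml import. For the real file you would hand a File to parse instead of calling parseText.

```groovy
import groovy.xml.XmlSlurper

// Invented two-record document standing in for the downloaded file
def xml = '''<osm>
  <node id="1" lat="40.41" lon="-3.70"><tag k="name" v="First"/></node>
  <node id="2" lat="51.50" lon="-0.12"><tag k="name" v="Second"/></node>
</osm>'''

// parseText returns the root element, so its children are one step away
def osm = new XmlSlurper().parseText(xml)

def seenIds = []
// osm.node selects every <node> child of the root; the closure runs once
// per element, with that element passed in as the parameter
osm.node.each { node ->
    seenIds << node.@id.text()
}

println seenIds
```

The closure body is where the rest of the work happens in the following sections: build a Map for the node, then insert it.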

Create GeoJSON

Since MongoDB documents are effectively just maps of key-value pairs, we're going to create a Map coffeeShop that contains the document structure that represents the coffee shop that we want to save into the database. Firstly, we initialise this map with the attributes of the node. Remember these attributes are something like:

    <node id="18464077" lat="-33.8911183" lon="151.1958773">

We're going to save the ID as a value for a new field called openStreetMapId. We need to do something a bit more complicated with the latitude and longitude, since we need to store them as GeoJSON, which looks something like:

    { 'location' : { 'coordinates': [<longitude>, <latitude>],
                     'type' : 'Point' } }

In lines 12-14 you can see that we create a Map that looks like the GeoJSON, pulling the lat and lon attributes into the appropriate places.

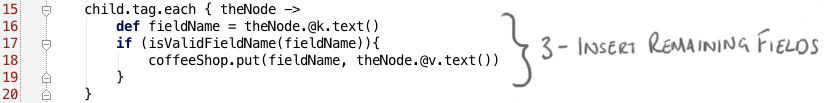
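A minimal sketch of building that Map from the node attributes might look like the following. It assumes Groovy 3 or later, and it stores the ID as a String and the coordinates as doubles, which is a choice made for this example and may differ from the script.

```groovy
import groovy.xml.XmlSlurper

def node = new XmlSlurper().parseText(
        '<node id="18464077" lat="-33.8911183" lon="151.1958773"/>')

// Nested Map literals mirror the shape of the document we want to store
def coffeeShop = [
    openStreetMapId: node.@id.text(),
    location       : [
        // GeoJSON puts longitude first, then latitude
        coordinates: [node.@lon.toDouble(), node.@lat.toDouble()],
        type       : 'Point'
    ]
]

println coffeeShop
```

The coordinate order is the easy thing to get wrong here: the XML lists lat before lon, but GeoJSON expects longitude before latitude.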

Insert Remaining Fields

Now for every tag element in the XML, we get the k attribute and check if it's a valid field name for MongoDB (it won't let us insert fields with a dot in them, and we don't want to override our carefully constructed location field). If so, we simply add this key as the field and its matching v attribute as the value into the map. This effectively copies the OpenStreetMap key/value data into key/value pairs in the MongoDB document so we don't lose any data, but we also don't do anything particularly interesting to transform it.

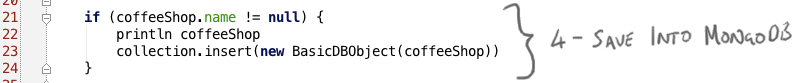
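Here is one way the tag-copying step could be written. The helper isValidFieldName is a hypothetical name introduced for this sketch, and the record is invented: it includes a dotted key and a location key purely to show both being skipped. Groovy 3 or later is assumed for the import.

```groovy
import groovy.xml.XmlSlurper

// Invented record with two keys that should be rejected
def node = new XmlSlurper().parseText('''<node id="1" lat="40.41" lon="-3.70">
  <tag k="name" v="Example Cafe"/>
  <tag k="addr.street" v="Main Street"/>
  <tag k="location" v="upstairs"/>
  <tag k="wheelchair" v="limited"/>
</node>''')

// Hypothetical helper: a key is usable if it contains no dot and does not
// clash with the GeoJSON field we built ourselves
def isValidFieldName = { String key -> !key.contains('.') && key != 'location' }

// Start from a Map that already holds the location
def coffeeShop = [location: [coordinates: [-3.70d, 40.41d], type: 'Point']]

node.tag.each { tag ->
    def key = tag.@k.text()
    if (isValidFieldName(key)) {
        coffeeShop[key] = tag.@v.text()
    }
}

println coffeeShop.keySet()
```

Keys that fail the check are silently dropped in this sketch; logging them would be a reasonable addition if you care about what was left out.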

Save Into MongoDB

Finally, once we've created a simple coffeeShop Map representing the document we want to save into MongoDB, we insert it into MongoDB if the map has a field called name. We could have checked this when we were reading the XML and putting it into the map, but it's actually much easier just to use the pretty Groovy syntax to check for a key called name in coffeeShop.
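The name check can be sketched with plain Maps. This version relies on Groovy truth, which may or may not be the exact expression used in the script, and a list stands in for the MongoDB collection so the snippet runs without a server. The two shops are invented sample data.

```groovy
// Invented sample documents: the second one has no name
def shops = [
    [openStreetMapId: '1', name: 'Example Cafe'],
    [openStreetMapId: '2', wheelchair: 'limited']
]

// Stand-in for the collection, so no database is needed to try this out
def toInsert = []

shops.each { coffeeShop ->
    // A missing key yields null, which Groovy treats as false
    if (coffeeShop.name) {
        toInsert << coffeeShop   // this is where the insert would happen
    }
}

println toInsert
```

Note that Groovy truth also rejects an empty name. If only the presence of the key matters, coffeeShop.containsKey('name') is the stricter alternative.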

When we want to insert the Map we need to turn this into a BasicDBObject, the Java Driver's document type, but this is easily done by calling the constructor that takes a Map. Alternatively, there's a Groovy syntax which would effectively do the same thing, which you might prefer:

    collection.insert(coffeeShop as BasicDBObject)

Tell MongoDB that we want to perform Geo queries on this data

Because we're going to do a nearSphere query on this data, we need to add a "2dsphere" index on our location field. We created the location field as GeoJSON, so all we need to do is call createIndex for this field.

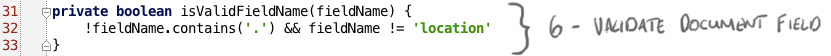
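A sketch of the index creation with the legacy driver API follows. As before, it assumes a server on localhost:27017, and the driver version plus the names coffeeDemo and coffeeShops are placeholders for illustration rather than the script's own.

```groovy
@Grab('org.mongodb:mongo-java-driver:3.12.14')
import com.mongodb.BasicDBObject
import com.mongodb.MongoClient

def mongoClient = new MongoClient()
def collection = mongoClient.getDB('coffeeDemo').getCollection('coffeeShops')

// Index the GeoJSON field so geospatial queries can use it
collection.createIndex(new BasicDBObject('location', '2dsphere'))

// Read the index names back to confirm the new index is there
def indexNames = collection.indexInfo*.get('name')
println indexNames

mongoClient.close()
```

Creating an index that already exists with the same definition is a no-op, so it is safe to leave this call in a script that gets re-run.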

Conclusion

So that's it! Groovy is a nice tool for this sort of script-y thing - not only is it a scripting language, but its built-in support for XML, really nice Map syntax and support for closures make it the perfect tool for iterating over XML data and transforming it into something that can be inserted into a MongoDB collection.